打开微信,使用扫一扫进入页面后,点击右上角菜单,

点击“发送给朋友”或“分享到朋友圈”完成分享

本文主要描述了对开源yolox模型进行星空体育平台的移植步骤(下)

参考实现:https://github.com/Megvii- Detection/YOLOX

截至本文完成,使用 commit 74b637b494ad6a968c8bc8afec5ccdd7ca6b544f

接上篇:【YOLOX 部署】开源yolox模型进行星空体育200平台的移植(上篇)

2.4 demo 修改

推理 demo 改动主要就是量化执行

MLU 推理过程:

融合模式:被融合的多个层作为单独的运算(单个 Kernel)在 MLU上运⾏。根据⽹络中的层是否可以被融合,⽹络被拆分为若⼲个⼦⽹络段。 MLU 与 CPU 间的数据拷⻉只在各个⼦⽹络之间发⽣。

逐层模式:逐层模式中,每层的操作都作为单独的运算(单个 Kernel)在 MLU 上运⾏,⽤⼾可以将每层结果导出到 CPU 上,⽅便⽤⼾进⾏调试。

一般来说,在线逐层模式更适用于调试环节,在线融合模式可以查看网络融合情况;

主要改动在 tools/demo.py 文件

首先需要引入 mlu 相关包

import torch_mlu import torch_mlu.core.mlu_model as ct import torch_mlu.core.mlu_quantize as mlu_quantize

关于量化函数地相关介绍,可以参考第三节 https://developer./index/curriculum/expdetails/id/10/classid/8.html

这里定义了了一个辅助函数,对网络进行量化,并保存量化后的权重,有了量化后的权重就可以保存下来供后续使用了

def quantize(model, imgs, quant_type, quant_model_name):

mean = [0, 0, 0]

std = [1.0 / 255.0 , 1.0 / 255.0, 1.0 / 255.0]

qconfig = {'iteration' : 1, 'mean': mean, 'std': std, 'firstconv' : True}

quantized_model = mlu_quantize.quantize_dynamic_mlu(

model, qconfig, dtype=quant_type, gen_quant=True)

for img in imgs:

pred = quantized_model(img)

torch.save(quantized_model.state_dict(), quant_model_name)接下来我们改写 inference 函数中相关代码,引入 mlu 推理步骤

cpu 推理:

这里cpu 推理增加了 176 行,因为我们修改了 decode_inference 为 False, 所以 cpu 也统一在外面执行,保持和 mlu 一致。

174 t0 = time.time()

175 cpu_outputs = self.model(img)

176 self.model.head.decode_outputs(cpu_outputs, dtype=cpu_outputs.type())

177 # if self.decoder is not None:

178 # cpu_outputs = self.decoder(cpu_outputs, dtype=cpu_outputs.type())

179 cpu_outputs = postprocess(

180 cpu_outputs, self.num_classes, self.confthre,

181 self.nmsthre, class_agnostic=True

182 )

183 logger.info("CPU Infer time: {:.4f}s".format(time.time() - t0))

184 print("cpu outputs: ", cpu_outputs[0])在线逐层推理:

这里主要调用 mlu 接口 torch_mlu.core.mlu_quantize.quantize_dynamic_mlu 对模型进行量化,

197 行使用量化后的模型进行推理

188 quantize(self.model, [img], args.quant, quant_path)

189 state_dict = torch.load(quant_path)

190 ct.set_core_number(int(args.core_num))

191 ct.set_core_version(args.mlu_device.upper())

192 quantized_net = torch_mlu.core.mlu_quantize.quantize_dynamic_mlu(self.model)

193 quantized_net.load_state_dict(state_dict,strict=False)

194 quantized_net.eval()

195 quantized_net.to(ct.mlu_device())

196 t1 = time.time()

197 pred = quantized_net(img.half().to(ct.mlu_device()))

198 mlu_outputs = pred.cpu().float()

199 self.model.head.decode_outputs(mlu_outputs, dtype=mlu_outputs.type())

200 # if self.decoder is not None:

201 # mlu_outputs = self.decoder(mlu_outputs, dtype=mlu_outputs.type())

202 mlu_outputs = postprocess(

203 mlu_outputs, self.num_classes, self.confthre,

204 self.nmsthre, class_agnostic=True

205 )

206 logger.info("MLU by Infer time: {:.4f}s".format(time.time() - t1))

207 print("mlu output: ", mlu_outputs[0])

208 np.allclose(mlu_outputs[0].detach().numpy(), cpu_outputs[0].detach().numpy())保存离线模型:

如果要运行在线融合模式,需要在运行前向过程前调用jit.trace()接口生成静态图。首先会对整个网络运行一遍逐层模式,同时构建一个静态图;然后对静态图进行优化(包括去除冗余算子、小算子融、数据块复用等)得到一个优化后的静态图;之后会根据输入数据的设备类型进行基于设备的优化,生成针对当前设备的指令。

jit.trace 之后可以使用 save_as_cambricon 保存离线模型供后续使用。

209 # save cambricon offline model

210 if True:

211 ct.save_as_cambricon("./offline_models/"+args.name+"_"+args.quant+"_core"+args.core_num+"_"+args.mlu_device)

212 torch.set_grad_enabled(False)

213 trace_input = torch.randn(1, 3, 640, 640, dtype=torch.float)

214 trace_input=trace_input.half()

215 trace_input=trace_input.to(ct.mlu_device())

216 quantized_net_jit = torch.jit.trace(quantized_net, trace_input, check_trace = False)

217 pred = quantized_net_jit(trace_input)

218 ct.save_as_cambricon("")融合推理:

225 行使用融合模型进行推理。

221 sum_time = 0.0

222 # infer using offline model

223 for i in range(16):

224 inference_start = time.time()

225 pred = quantized_net_jit(img.half().to(ct.mlu_device()))

226 # print("mlu inference time: ", i, " ", time.time() - inference_start)

227 mlu_outputs = pred.cpu().float()

228 #print(mlu_outputs.shape)

229 self.model.head.decode_outputs(mlu_outputs, dtype=mlu_outputs.type())

230 # if self.decoder is not None:

231 # mlu_outputs = self.decoder(mlu_outputs, dtype=mlu_outputs.type())

232 mlu_outputs = postprocess(

233 mlu_outputs, self.num_classes, self.confthre,

234 self.nmsthre, class_agnostic=True

235 )

236 once_time = time.time() - inference_start

237 np.allclose(mlu_outputs[0].detach().numpy(), cpu_outputs[0].detach().numpy())

238 sum_time = sum_time + once_time

239 # print("mlu e2e time:", i, " ", once_time)

240 logger.info("MLU FUSION AVG Infer time: {:.4f}s", sum_time/16)另外,由于修改了 Focus 源码,demo.py 在权重读取的时候也需要加载一下新增的 conv 权重(详情见【YOLOX 部署】(上篇))

360 # load the model state dict

361 # model.load_state_dict(ckpt["model"])

362 # 修改权值文件,为新添加的卷积增加权值数据。

363 weight = [[1.,0.,0.,0.],[0.,0.,0.,0.],[0.,0.,0.,0.],

364 [0.,0.,0.,0.],[1.,0.,0.,0.],[0.,0.,0.,0.],

365 [0.,0.,0.,0.],[0.,0.,0.,0.],[1.,0.,0.,0.],

366 [0.,0.,1.,0.],[0.,0.,0.,0.],[0.,0.,0.,0.],

367 [0.,0.,0.,0.],[0.,0.,1.,0.],[0.,0.,0.,0.],

368 [0.,0.,0.,0.],[0.,0.,0.,0.],[0.,0.,1.,0.],

369 [0.,1.,0.,0.],[0.,0.,0.,0.],[0.,0.,0.,0.],

370 [0.,0.,0.,0.],[0.,1.,0.,0.],[0.,0.,0.,0.],

371 [0.,0.,0.,0.],[0.,0.,0.,0.],[0.,1.,0.,0.],

372 [0.,0.,0.,1.],[0.,0.,0.,0.],[0.,0.,0.,0.],

373 [0.,0.,0.,0.],[0.,0.,0.,1.],[0.,0.,0.,0.],

374 [0.,0.,0.,0.],[0.,0.,0.,0.],[0.,0.,0.,1.]]

375 ckpt["backbone.backbone.stem.space_to_depth_conv.weight"] = torch.from_numpy(np.array(weight).reshape(12,3,2,2).astype(np.fl oat32))

376 model.load_state_dict(ckpt)

377

378 logger.info("loaded checkpoint done.")demo.py 完整的改动上传在此处:

安装 yolox:

python setup.py develop #安装 yolox

新建一个目录存放离线模型

mkdir offline_models

执行脚本

python tools/demo.py image -n yolox-s -c weights/yolox-s-unzip.pth --path assets/dog.jpg --conf 0.25 --nms 0.45 --tsize 640 --save_result --device cpu -q int8 -cn 16 -md mlu270

注意:这里使用【YOLOX 部署】(上篇)中使用高版本 pytorch 导出的权重文件:

weights/yolox-s-unzip.pth

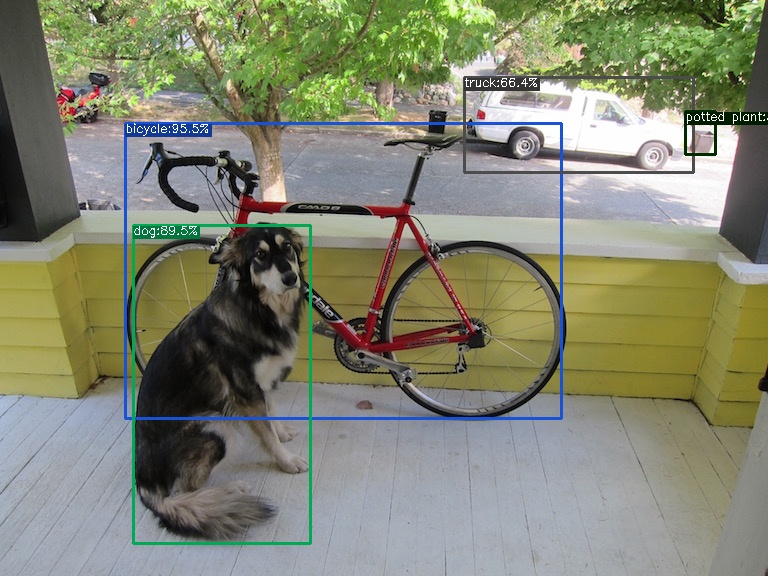

可以得到输出打印

(pytorch) root@bogon:/home/Cambricon-MLU270/YOLOX# python tools/demo.py image -n yolox-s -c weights/yolox-s-unzip.pth --path assets/dog.jpg --conf 0.25 --nms 0.45 --tsize 640 --save_result --device cpu -q int8 -cn 16 -md mlu270 CNML: 7.10.2 ba20487 CNRT: 4.10.1 a884a9a 2022-09-21 15:59:36.773 | INFO | __main__:main:335 - Args: Namespace(camid=0, ckpt='weights/yolox-s-unzip.pth', conf=0.25, core_num='16', demo='image', device='cpu', exp_file=None, experiment_name='yolox_s', fp16=False, fuse=False, legacy=False, mlu_device='mlu270', name='yolox-s', nms=0.45, path='assets/dog.jpg', quant='int8', save_result=True, trt=False, tsize=640) 2022-09-21 15:59:37.231 | INFO | __main__:main:345 - Model Summary: Params: 8.97M, Gflops: 26.96 2022-09-21 15:59:37.233 | INFO | __main__:main:358 - loading checkpoint 2022-09-21 15:59:38.096 | INFO | __main__:main:378 - loaded checkpoint done. 2022-09-21 15:59:39.527 | INFO | __main__:inference:183 - CPU Infer time: 1.3876s cpu outputs: tensor([[1.0374e+02, 9.9162e+01, 4.6693e+02, 3.5099e+02, 9.8331e-01, 9.7071e-01, 1.0000e+00], [1.1192e+02, 1.8571e+02, 2.5850e+02, 4.5805e+02, 9.8379e-01, 9.2798e-01, 1.6000e+01], [3.8575e+02, 6.4014e+01, 5.7842e+02, 1.4313e+02, 6.6523e-01, 9.0992e-01, 7.0000e+00], [5.7019e+02, 9.2405e+01, 5.9696e+02, 1.2823e+02, 5.3170e-01, 8.2378e-01, 5.8000e+01]]) 2022-09-21 16:00:04.251 | INFO | __main__:inference:206 - MLU by Infer time: 21.6487s mlu output: tensor([[1.0452e+02, 1.0290e+02, 4.6576e+02, 3.4685e+02, 9.7900e-01, 9.7168e-01, 1.0000e+00], [1.1068e+02, 1.8592e+02, 2.5926e+02, 4.5286e+02, 9.7998e-01, 9.1504e-01, 1.6000e+01], [3.8656e+02, 6.4331e+01, 5.7586e+02, 1.4425e+02, 7.6172e-01, 9.1455e-01, 7.0000e+00], [5.7089e+02, 9.3422e+01, 5.9616e+02, 1.2817e+02, 3.6182e-01, 7.5781e-01, 5.8000e+01]]) 2022-09-21 16:01:01.747 | INFO | __main__:inference:240 - MLU FUSION AVG Infer time: 0.0591s 2022-09-21 16:01:01.767 | INFO | __main__:image_demo:278 - Saving detection result in ./YOLOX_outputs/yolox_s/vis_res/2022_09_21_15_59_38/dog.jpg

可以看到,cpu 和 mlu 推理结果一致。

cpu 推理耗时 1.3876s, mlu 融合推理耗时 0.0591s

查看图片结果:

3. 220 部署

如果需要在 220 上部署,则在 jit.trace 出 .cambrion 的时候,需要指定 core version 为 MLU220

在

offline_models

得到 .cambricon 模型文件后,可以参考 https://github.com/CambriconECO/Pytorch_Yolov5_Inference

替换推理部分,同时需要参照 tools/demo.py 开发相应的前后处理。

热门帖子

精华帖子